Data Preprocessing in Python using Scikit Learn

What is Data Preprocessing?

Data Preprocessing is a technique that is used to convert the raw data into a clean data set. In other words, whenever the data is gathered from different sources it is collected in raw format which is not feasible for the analysis.

Therefore, certain steps are executed to convert the data into a small clean data set. This technique is performed before the execution of the Iterative Analysis. The set of steps is known as Data Preprocessing. It includes –- Data Cleaning

- Data Integration

- Data Transformation

- Data Reduction

Data Preprocessing is a technique that is used to convert the raw data into a clean data set. In other words, whenever the data is gathered from different sources it is collected in raw format which is not feasible for the analysis.

Therefore, certain steps are executed to convert the data into a small clean data set. This technique is performed before the execution of the Iterative Analysis. The set of steps is known as Data Preprocessing. It includes –

- Data Cleaning

- Data Integration

- Data Transformation

- Data Reduction

Need of Data Preprocessing

The format of the data must be in a proper way to obtain better outcomes from the implemented model in Machine Learning and Deep Learning projects, this is where the Data Preparation is used.

Some specified Machine Learning and Deep Learning model need information in a specified format, for example, Random Forest algorithm does not support null values, therefore to execute random forest algorithm null values has to be managed from the original raw data set.

The format of the data must be in a proper way to obtain better outcomes from the implemented model in Machine Learning and Deep Learning projects, this is where the Data Preparation is used.

Some specified Machine Learning and Deep Learning model need information in a specified format, for example, Random Forest algorithm does not support null values, therefore to execute random forest algorithm null values has to be managed from the original raw data set.

Various data pre-processing techniques:

Standardization:Data standardization is the method by which one or more attributes are rescaled such that they have a mean value of 0 and a standard deviation of 1.

Normalization:The aim of normalization is to adjust the numeric column values to a standard scale in the dataset, without distorting the variations in the value ranges.

One-hot Encoding:One hot encoding is a process that transforms categorical data into a type that could be given to ML algorithms to do a better prediction job. It only accepts numerical information as an input. So, by using Label Encoder, the categorical data that needs to be encoded is transformed into a numerical form.

Discretization:Discretization refers to the method of converting or partitioning discretized or nominal attributes / features / variables / intervals from continuous attributes, features or variables.

Imputation:For missing data, the imputation technique develops fair guesses. When the amount of missing data is tiny, it's most beneficial. If the portion of missing information is too large, there is no natural variance in the results that could result in an efficient model.

Standardization:

Data standardization is the method by which one or more attributes are rescaled such that they have a mean value of 0 and a standard deviation of 1.

Normalization:

The aim of normalization is to adjust the numeric column values to a standard scale in the dataset, without distorting the variations in the value ranges.

One-hot Encoding:

One hot encoding is a process that transforms categorical data into a type that could be given to ML algorithms to do a better prediction job. It only accepts numerical information as an input. So, by using Label Encoder, the categorical data that needs to be encoded is transformed into a numerical form.

Discretization:

Discretization refers to the method of converting or partitioning discretized or nominal attributes / features / variables / intervals from continuous attributes, features or variables.

Imputation:

For missing data, the imputation technique develops fair guesses. When the amount of missing data is tiny, it's most beneficial. If the portion of missing information is too large, there is no natural variance in the results that could result in an efficient model.

What is Scikit Learn?

Scikit-learn is a Python library that provides a broad range of algorithms for supervised and unsupervised learning.

Scikit Learn is built on top of many Python libraries of common data and math. Such a design makes the integration between them all super simple. You can transfer numpy arrays and pandas data frames straight to Scikit's ML algorithms. It uses the following libraries

Scikit-learn is a Python library that provides a broad range of algorithms for supervised and unsupervised learning.

Scikit Learn is built on top of many Python libraries of common data and math. Such a design makes the integration between them all super simple. You can transfer numpy arrays and pandas data frames straight to Scikit's ML algorithms. It uses the following libraries

Let's start digging!!

1. First of all download your own dataset with many numerical features with different range value to see the effect of data preprocessing. Here, I am using this dataset.

2. Importing required packages.

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import accuracy_score

from sklearn.preprocessing import scale

from sklearn.linear_model import LogisticRegression

from sklearn.preprocessing import LabelEncoder

from sklearn.preprocessing import OneHotEncoder3. Importing datasetdf=pd.read_csv('data.csv',na_values=['?'])

df

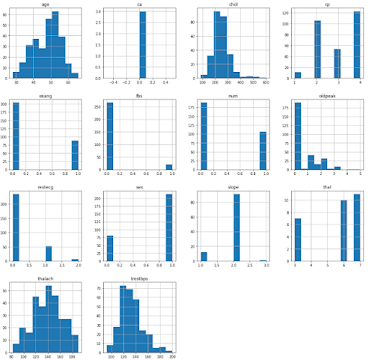

4. Data Explorationdf.hist(figsize=[18,18])

5. Data Cleaningdf.fillna(df.median(),inplace=True)

df = df.drop_duplicates()6. Creating training and test datasetx=df[['age', 'sex', 'cp', 'trestbps', 'chol', 'fbs', 'restecg', 'thalach','exang', 'oldpeak', 'slope', 'thal']]

y = df['num']

X_train,X_test,Y_train,Y_test = train_test_split(df,y,test_size=0.2)7. Applying KNN model before preprocessing.knn=KNeighborsClassifier(n_neighbors=5)

knn.fit(X_train,Y_train)

accuracy_score(Y_test,knn.predict(X_test)) accuracy_score= 0.7288135593220338

8. Now applying feature scaling.min_max=MinMaxScaler()

X_train_minmax=min_max.fit_transform(X_train[['trestbps','chol','thalach']])

X_test_minmax=min_max.fit_transform(X_test[['trestbps','chol','thalach']])9. Applying KNN model after feature scaling.del knn

knn=KNeighborsClassifier(n_neighbors=5)

knn.fit(X_train_minmax,Y_train)accuracy_score(Y_test,knn.predict(X_test_minmax)) accuracy_score= 0.7457627118644068 (2% increase)

10. Applying Feature StandardizationX_train_scale = scale(X_train[['trestbps','chol','thalach']])

X_test_scale = scale(X_test[['trestbps','chol','thalach']])

log=LogisticRegression()

log.fit(X_train_scale,Y_train)

accuracy_score(Y_test,log.predict(X_test_scale)) accuracy_score= 0.6949152542372882 (3% decrease)

11. Applying One-Hot Encodingenc=OneHotEncoder(sparse=False)

X_train_1=X_train

X_test_1=X_test

columns=['sex', 'cp','fbs', 'restecg', 'exang', 'oldpeak', 'slope', 'thal']

for col in columns:

data=X_train[[col]].append(X_test[[col]])

enc.fit(data)

# Fitting One Hot Encoding on train data

temp = enc.transform(X_train[[col]])

# Changing the encoded features into a data frame with new column names

temp=pd.DataFrame(temp,columns=[(col+"_"+str(i)) for i in data[col].value_counts().index])

# In side by side concatenation index values should be same

# Setting the index values similar to the X_train data frame

temp=temp.set_index(X_train.index.values)

# adding the new One Hot Encoded varibales to the train data frame

X_train_1=pd.concat([X_train_1,temp],axis=1)

# fitting One Hot Encoding on test data

temp = enc.transform(X_test[[col]])

# changing it into data frame and adding column names

temp=pd.DataFrame(temp,columns=[(col+"_"+str(i)) for i in data[col].value_counts().index])

# Setting the index for proper concatenation

temp=temp.set_index(X_test.index.values)

# adding the new One Hot Encoded varibales to test data frame

X_test_1=pd.concat([X_test_1,temp],axis=1)

X_train_1.columns

X_train_scale=scale(X_train_1)

X_test_scale=scale(X_test_1)

log.fit(X_train_scale,Y_train)

accuracy_score(Y_test,log.predict(X_test_scale)) accuracy_score = 1.0 (28% increase)

1. First of all download your own dataset with many numerical features with different range value to see the effect of data preprocessing. Here, I am using this dataset.

2. Importing required packages.

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import accuracy_score

from sklearn.preprocessing import scale

from sklearn.linear_model import LogisticRegression

from sklearn.preprocessing import LabelEncoder

from sklearn.preprocessing import OneHotEncoder3. Importing dataset

df=pd.read_csv('data.csv',na_values=['?'])

df4. Data Exploration

df.hist(figsize=[18,18])5. Data Cleaning

df.fillna(df.median(),inplace=True)

df = df.drop_duplicates()6. Creating training and test dataset

x=df[['age', 'sex', 'cp', 'trestbps', 'chol', 'fbs', 'restecg', 'thalach','exang', 'oldpeak', 'slope', 'thal']]

y = df['num']

X_train,X_test,Y_train,Y_test = train_test_split(df,y,test_size=0.2)7. Applying KNN model before preprocessing.

knn=KNeighborsClassifier(n_neighbors=5)

knn.fit(X_train,Y_train)

accuracy_score(Y_test,knn.predict(X_test)) accuracy_score= 0.7288135593220338

8. Now applying feature scaling.

min_max=MinMaxScaler()

X_train_minmax=min_max.fit_transform(X_train[['trestbps','chol','thalach']])

X_test_minmax=min_max.fit_transform(X_test[['trestbps','chol','thalach']])9. Applying KNN model after feature scaling.

del knn

knn=KNeighborsClassifier(n_neighbors=5)

knn.fit(X_train_minmax,Y_train)accuracy_score(Y_test,knn.predict(X_test_minmax)) accuracy_score= 0.7457627118644068 (2% increase)

10. Applying Feature Standardization

X_train_scale = scale(X_train[['trestbps','chol','thalach']])

X_test_scale = scale(X_test[['trestbps','chol','thalach']])

log=LogisticRegression()

log.fit(X_train_scale,Y_train)

accuracy_score(Y_test,log.predict(X_test_scale)) accuracy_score= 0.6949152542372882 (3% decrease)

11. Applying One-Hot Encoding

enc=OneHotEncoder(sparse=False)

X_train_1=X_train

X_test_1=X_test

columns=['sex', 'cp','fbs', 'restecg', 'exang', 'oldpeak', 'slope', 'thal']

for col in columns:

data=X_train[[col]].append(X_test[[col]])

enc.fit(data)

# Fitting One Hot Encoding on train data

temp = enc.transform(X_train[[col]])

# Changing the encoded features into a data frame with new column names

temp=pd.DataFrame(temp,columns=[(col+"_"+str(i)) for i in data[col].value_counts().index])

# In side by side concatenation index values should be same

# Setting the index values similar to the X_train data frame

temp=temp.set_index(X_train.index.values)

# adding the new One Hot Encoded varibales to the train data frame

X_train_1=pd.concat([X_train_1,temp],axis=1)

# fitting One Hot Encoding on test data

temp = enc.transform(X_test[[col]])

# changing it into data frame and adding column names

temp=pd.DataFrame(temp,columns=[(col+"_"+str(i)) for i in data[col].value_counts().index])

# Setting the index for proper concatenation

temp=temp.set_index(X_test.index.values)

# adding the new One Hot Encoded varibales to test data frame

X_test_1=pd.concat([X_test_1,temp],axis=1)

X_train_1.columnsX_train_scale=scale(X_train_1)

X_test_scale=scale(X_test_1)

log.fit(X_train_scale,Y_train)

accuracy_score(Y_test,log.predict(X_test_scale)) accuracy_score = 1.0 (28% increase)

From the above results we can see that applying different preprocessing technique produces different results.

Question and Answers

How to decide variance threshold in data reduction?The estimation of the variance threshold depends on a specific distribution's probability density function.

Does the output result same even after applying model on encoded data v\s original data?No, because most machine learning algorithms require numerical input and output variables. So, After applying one-hot encoding accuracy of ML algorithm increases.

Please like, Share and comment.

References:

How to decide variance threshold in data reduction?

The estimation of the variance threshold depends on a specific distribution's probability density function.

Does the output result same even after applying model on encoded data v\s original data?

No, because most machine learning algorithms require numerical input and output variables. So, After applying one-hot encoding accuracy of ML algorithm increases.

Please like, Share and comment.

References:

No comments:

Post a Comment